Speech Bubble Experiment

Why

Closed captions have been around for a while; it's time to try to innovate on them.

What

Speech bubbles are an experimental way to display what is commonly known as captions or subtitles. Using results from multiple AI services, we can programmatically add speech bubbles to videos. This is a very rough proof of concept (PoC) whose only intent is to show what is possible.

Video

Todo: the bubble jerkiness can be fixed; a smooth, elastic-like bubble movement would be ideal.

How

We take the results from the ContentAI extractors aws_transcribe, aws_closed_captions, and aws_faces and run them through the Box2D physics engine.

ContentAI Extractors

- aws_transcribe: Converts speech to text.

- aws_closed_captions (experimental): Takes the results from the aws_transcribe extractor and creates an .srt closed caption file.

- aws_faces: Detects the bounding box, attributes, emotions, landmarks, quality, and pose for each face.
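To make the captioning step concrete, here is a minimal sketch of how word-level timings could be grouped into .srt cues. It is not the actual aws_closed_captions implementation: the word dictionaries (`text`, `start`, `end` in seconds), the function names, and the `max_words` grouping rule are all assumptions made for illustration.

```python
from typing import Dict, List


def format_srt_time(seconds: float) -> str:
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm."""
    millis = int(round(seconds * 1000))
    hours, millis = divmod(millis, 3_600_000)
    minutes, millis = divmod(millis, 60_000)
    secs, millis = divmod(millis, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{millis:03d}"


def words_to_srt(words: List[Dict], max_words: int = 7) -> str:
    """Group timed words into numbered SRT cues of at most max_words each.

    Each word is a hypothetical dict: {"text": str, "start": float, "end": float}.
    """
    cues = []
    for i in range(0, len(words), max_words):
        chunk = words[i:i + max_words]
        text = " ".join(w["text"] for w in chunk)
        start = format_srt_time(chunk[0]["start"])
        end = format_srt_time(chunk[-1]["end"])
        # An SRT cue is: index, time range, text, separated by newlines.
        cues.append(f"{len(cues) + 1}\n{start} --> {end}\n{text}")
    return "\n\n".join(cues) + "\n" if cues else ""
```

A real captioner would probably also break cues on punctuation and pauses rather than on a fixed word count.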

Box2D

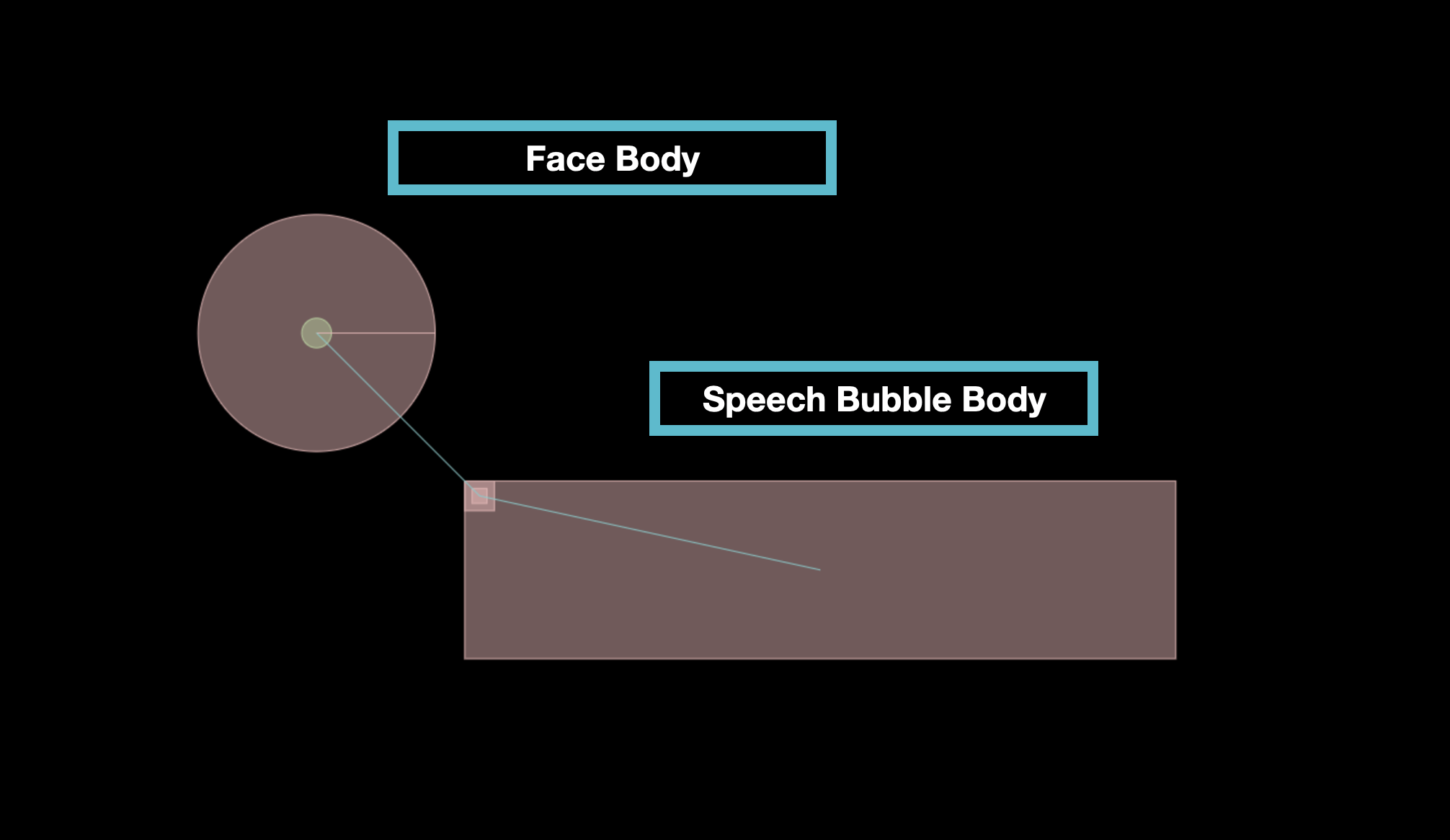

Attach physics objects to characters' faces.

- Face Body - attach a face body to the character's face. Five times a second, we take the position from aws_faces and update our physics face body.

- Speech Bubble Body - we render the caption text onto the bubble body.

- Fixed Revolute Joint - we connect the face and speech bubble bodies with a joint. There are various types of Box2D joints, but for this PoC we try to keep the two bodies an equal distance apart using a revolute joint. As we update the position of the face body with the results from ContentAI, the speech bubble body's position is updated by the physics engine.
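The idea of a bubble that follows a face updated five times a second can be sketched without a physics engine. The example below is not Box2D and does not use a revolute joint; it swaps in a simple damped spring, written in plain Python, to show the same relationship: the face position drives the bubble, and the simulation step moves the bubble. The names `face_position_at`, `Bubble`, and `step_bubble`, along with the stiffness and damping values, are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec = Tuple[float, float]


def face_position_at(samples: List[Vec], t: float, sample_rate: float = 5.0) -> Vec:
    """Linearly interpolate face centers that were sampled at sample_rate Hz.

    samples must be non-empty; times outside the sampled range are clamped.
    """
    pos = min(max(t * sample_rate, 0.0), len(samples) - 1)
    i = int(pos)
    j = min(i + 1, len(samples) - 1)
    frac = pos - i
    (x0, y0), (x1, y1) = samples[i], samples[j]
    return (x0 + (x1 - x0) * frac, y0 + (y1 - y0) * frac)


@dataclass
class Bubble:
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0


def step_bubble(bubble: Bubble, face: Vec, offset: Vec, dt: float,
                stiffness: float = 40.0, damping: float = 12.0) -> None:
    """Advance the bubble by dt seconds toward face + offset.

    Uses a damped spring integrated with semi-implicit Euler:
    velocity is updated first, then position uses the new velocity.
    """
    target_x, target_y = face[0] + offset[0], face[1] + offset[1]
    ax = stiffness * (target_x - bubble.x) - damping * bubble.vx
    ay = stiffness * (target_y - bubble.y) - damping * bubble.vy
    bubble.vx += ax * dt
    bubble.vy += ay * dt
    bubble.x += bubble.vx * dt
    bubble.y += bubble.vy * dt
```

In use, a render loop would call `face_position_at` with the current video time and then `step_bubble` once per rendered frame, so the bubble eases toward the face instead of jumping on each new face sample.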

Future Ideas

- Customize bubble - give brands and franchises the ability to customize their bubbles.

- Multiple language support - our extractors support translation across up to 32 different languages for global reach.

- Modify text size - based on the character's emotion we can alter the text/font of individual words.

- Highlight spoken word - with the millisecond granularity we can highlight words in the speech bubble as they are spoken.

- Typewriter effect - with the millisecond granularity we can append the next word to the end of the line as it's being spoken.

- Multiple speaker support - the experiment only supports one face.

- Enhance bubble movement - the current bubble is limited in movement and is jerky; both shortcomings can be improved.
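The highlight and typewriter ideas in the list above both reduce to one lookup: given word timings and the current playback time, which words have started and which one is being spoken. Here is a minimal sketch, assuming each word is a dict with hypothetical `text`, `start_ms`, and `end_ms` fields; the function name `bubble_text_at` is also made up for this example.

```python
from typing import Dict, List, Optional, Tuple


def bubble_text_at(words: List[Dict], t_ms: int) -> Tuple[str, Optional[int]]:
    """Return the text typed so far and the index of the word spoken at t_ms.

    The text contains every word whose start time has passed (typewriter
    effect). The index is the word to highlight, or None during a pause.
    """
    shown = [w["text"] for w in words if w["start_ms"] <= t_ms]
    active = None
    for i, w in enumerate(words):
        if w["start_ms"] <= t_ms < w["end_ms"]:
            active = i
            break
    return " ".join(shown), active
```

A renderer could call this once per frame, draw the returned text in the bubble, and style the word at the returned index differently.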

Use Cases

- Social media clips - viewers tend to scroll past content within 3-5 seconds and typically have the audio turned off, so speech bubbles could help grab their attention.

- Top Player Support - we own the video player that our customer-facing products use, so we could add speech bubbles to our player.